Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- All Forums

- :

- QlikView App Dev

- :

- Circular reference error when using link tables

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Circular reference error when using link tables

Hi Qlik community,

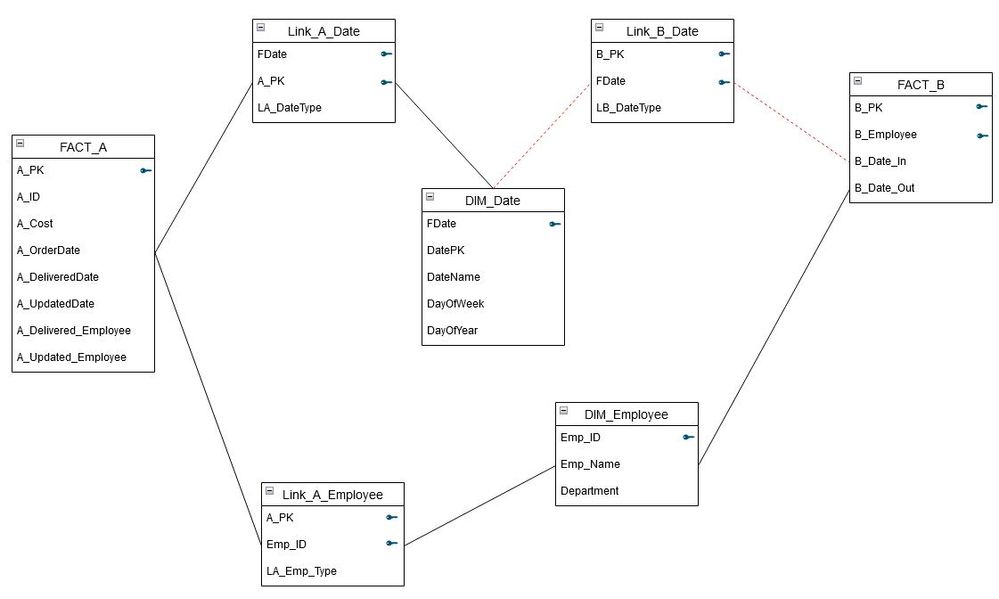

I need advice on how to tackle a circular reference when using link tables in my Qlik Sense. Please look at this sample data logical view:

I have loaded a few FACT and DIMENSION tables and associate them using data load editor. The FACT table, eg FACT_A, have multiple fields (date) that points back to a master DIMENSION date. I created a link table to address these multiple fields. Likewise for the employee DIMENSION too. This link table works perfectly.

However, the circular reference start to appear once the shared DIMENSION is being associated with different FACT. The dotted RED line is the circular reference error. Dimension modeling allows a DIMENSION table to be share across different FACT tables via foreign keys however Qlik seem to make this impossible with this circular reference.

What are the strategies to tackle this problem?

Note

- I need to maintain the link tables since a FACT can contain multiple dates (for example).

- DIMENSION tables will have to be shared with different FACT tables.

Thanks!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Qlik hasn't a relational data-model else an associative one and within the most scenarios it's not possible to share dimension-tables between multiple fact-tables unless specialized link-tables are created. That's hot loved from many people which come from the sql-world because it seems to be more logically for they.

But quite often it's not the most suitable choice in regard of efforts, simplicity and performance else it's the officially recommended star-scheme data-model with one (merged) fact-table and n dimension-tables. Therefore, I suggest you goes in this direction.

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi Marcus, thanks for your reply.

Appreciate if you can provide more info.

You mentioned specialized link-tables. In this case, are the link tables in my logical diagram I shared are the specialized link-tables you mentioned? If yes, does that mean there is a way to correct the circular reference, disregarding effort, simplicity and performance? How should this be done, if applicable?

For your second paragraph, I can't wrap my head on merging FACT tables to n DIMENSIONS. FACT_A and FACT_B are containing non-related data, and highly possibly different grain. By merging the FACT tables, this oppose to dimension modelling of data granularity. How should such FACT tables be join in Qlik then? Could you share details (guides, tutorials, white paper, real life examples) for this?

Thanks.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Based on my understanding of using same date field you should do a self concatenate to the table itself to reduce the load and computation or you can create a composite key of the final link table and then use it to link to the other facts/dimension tables and same to the calendar.

Also, can you elaborate whats the reason behind keeping the fact_a, link_a_employee and fact_ b separate,as they can be reduced via concatenation.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

With specialized link-tables are merged (mostly by concatenating the distinct key-values from the fact-tables together) key-tables meant. Such table could become larger as the fact-tables itself and by larger data-sets it could slowdown the performance significantly.

Therefore the efforts are better used to merge (usually also per concatenate - this doesn't mean that various joins/mappings to clean/replace/fill data and/or to enrich any information are also useful) the fact-tables together. That a fact-table is in some degree asynchron because not all fields are shared between the facts is often no problem whereby all fields with the same content should be harmonized - in regard to the fieldnames as well as to the values.

For example there is a sales-table and a budget-table - both will have among other things an amount-field and a period-field and both will contain the same content and therefore there shouldn't be a field sales and another budget else just value - and to differentiate them you may apply an extra field KPI and/or you may use an extra field SOURCE. Quite similar by the period-field and should the tables having a different granularity like date for sales and months for budget the budget-period-value should be converted into a date, for example with something like: makedate(year, month).

In many scenarios such simple approaches will work very well and no other method will be easier and it would be quite hard to develop a more performant data-model (if it's possible it would be no link-table model).

- Marcus

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Also, can you elaborate whats the reason behind keeping the fact_a, link_a_employee and fact_ b separate,as they can be reduced via concatenation.

FACT_A is an Order table and FACT_B is an Employee table. There are more fields, this image is just an example. The actual FACT_A is around 40+ fields and FACT_B is 10+ fields.

For example there is a sales-table and a budget-table - both will have among other things an amount-field and a period-field and both will contain the same content and therefore there shouldn't be a field sales and another budget else just value - and to differentiate them you may apply an extra field KPI and/or you may use an extra field SOURCE. Quite similar by the period-field and should the tables having a different granularity like date for sales and months for budget the budget-period-value should be converted into a date, for example with something like: makedate(year, month).

How will this work, if you have FACT tables that do not measure same content?

I will appreciate if you have a real example, or a tutorial somewhere which can lead to your proposed strategy as it is hard for me to visualise.

Edit: If I have 15+ FACT tables, does that mean I concatenate all of it into one extremely long and wide table?

Thanks both!

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

It will be always depending on the data and their relationship as well as the resources within the environment and the requirements which kind of data-model is the most suitable one. There is no data-model approach which could be applied for all scenarios.

Beside of this the recommendation to de-normalize the data and to merge them in the less as possible fact-tables remained true and showed the direction in which the developing should go. The larger the dataset is especially in regard to the system-resources the more important becomes this method.

Like above mentioned the more or less simple concatenating of the fact-tables will work for many scenarios. But this doesn't mean that not much more measures might be necessary or at least sensible. This could mean to re-design many tables logically and to outsource various fact-dimensions into dimension-tables (sometimes even measures into dimension-tables). The concatenating is a vertical merging but quite often facts could also horizontally be merged per joins or in Qlik more relevant per mapping (is much faster as joins, has no risks in regard to the relationship and changing the number of records, could be in various ways nested and is therefore extremely flexible and powerful).

That there is in the end a quite long and wide table is not mandatory a problem because of the way how Qlik stored and processed the data (always distinct values per field within system-tables which are linked with a bit-stuffed pointer to the data-table). Very important to the performance of Qlik is the number of distinct field-values and their formatting. Without (not needed) record-id's or granular timestamps (could be divided into dates and times) or similar things quite large datasets could become quite small in regard to their RAM consumption. And because of the fact that NULL won't be stored asynchronous tables are no issue.

If your real case is with 15+ of fact-tables is larger and more complex as hinted within the origin posting will of course require much more efforts to design a data-model but it doesn't change the above suggestions. Everything else won't be easier else be the opposite. IMO the Qlik logic is so easy and simple that many from the SQL world couldn't believe that such simple approaches could work, are really good working and therefore trying all possible more complex things ... therefore just give it a try.

- Marcus