Unexpected error encountered filling record reader buffer: HadoopExecutionException

Unexpected error encountered filling record reader buffer: HadoopExecutionException: Too long string in column [-1]: Actual len = [19]. MaxLEN=[12] Line: 1 Column: -1

This error doesn't say exactly which column is causing the issue. What should be done in this situation? Any details would be appreciated.

Hi Suvbin,

Sorry you are having trouble! It sounds like the Source and Target are out of sync and the DDL does not match. Can you set the source_capture and target_apply logging to VERBOSE? Also, can you check the apply_exceptions table in the Target? Since we would need to investigate further to see what is happening, we highly recommend that you open a support case. Make sure to attach all relevant information when opening it.
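One rough way to check for a DDL mismatch of this kind is to compare the declared column lengths on both sides. The sketch below is only an illustration: the function name `find_narrow_columns`, the column names, and the lengths are all made up, and it assumes the lengths have already been copied by hand from the source and target table definitions.

```python
def find_narrow_columns(source_lengths, target_lengths):
    """Return (column, source_len, target_len) for columns the target cannot hold."""
    problems = []
    for column, src_len in source_lengths.items():
        tgt_len = target_lengths.get(column)
        if tgt_len is None:
            problems.append((column, src_len, None))     # column missing on the target
        elif tgt_len < src_len:
            problems.append((column, src_len, tgt_len))  # target column is too narrow
    return problems


# Hypothetical column definitions, typed in by hand from each side's DDL.
source = {"ID": 10, "ORDER_REF": 19, "STATUS": 5}
target = {"ID": 10, "ORDER_REF": 12, "STATUS": 5}

for column, src_len, tgt_len in find_narrow_columns(source, target):
    print(f"{column}: source length {src_len}, target length {tgt_len}")
```

Any column reported here is a candidate for the "Too long string" error, because the target definition is narrower than what the source can send.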

Let us know if you have any other questions!

Thanks,

David

Hi,

Thank you for posting to the QDI forums. To debug data-related issues and the buffer being passed between the Source and Target, set logging for SOURCE_CAPTURE and TARGET_APPLY to VERBOSE, as this will show the command and the data being passed. You can also set keepCSVFiles on the Target to analyze the data that is read from the Source and pushed to Hadoop.

Note: If a support case is opened and the version is 2022.5 or above, make sure you include the log.key file from the Task directory so that the log files can be decrypted.

Thanks!

Bill

I am seeing the same issue. What was the solution? I don't see a DDL mismatch either.

Hello @SamJebaraj ,

The failure occurs because the column is defined with a maximum length of 12 in the Hadoop target definition, but the incoming value has a length of 19. To understand the cause further, please:

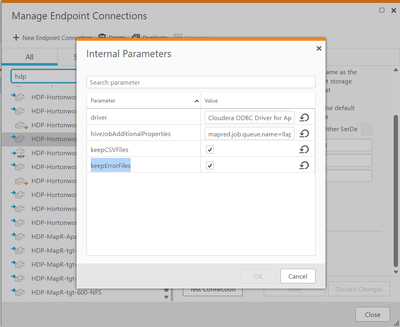

1. In target endpoint add internal parameter "keepCSVFiles" and "keepErrorFiles", set them to TRUE

After the problem is reproduced, check the generated .CSV files to see which values are too large to load. The files locate under folder "applied_files" and "data_files" in task subfolder, default location at Windows is "C:\Program Files\Attunity\Replicate\data\tasks\<Task Name>".

Sample of the setting:

2. If the problem occurs in the CDC stage, set logging for SOURCE_CAPTURE and TARGET_APPLY to VERBOSE, as Bill suggested above, and check the task log files to understand the reason.

Please remember to decrypt the task log files; otherwise the column data remains encrypted and masked.

3. Check the table structure on the source side and the target side to see whether the column lengths match, including the column collation, so that the target side can accept the maximum data length coming from the source side.

4. If you need additional assistance, please open a support ticket and attach the above information to the Salesforce portal case (please do not attach it here, as this thread is public for all users).
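For step 1, once the CSV files have been kept, a short script can help locate the over-long values, since the error itself only reports column -1. The following is only a sketch and not a Qlik tool: it assumes the kept files are plain, comma-delimited, UTF-8 text files with a .csv extension, and the function name `find_long_values` is made up for this example. It reports both the character count and the UTF-8 byte count, in case the target limit is counted in bytes rather than characters.

```python
import csv
from pathlib import Path


def find_long_values(folder, max_len, delimiter=","):
    """Yield (file, record, column, chars, utf8_bytes) for every over-long field.

    Assumes plain, delimiter-separated UTF-8 files ending in .csv; adjust the
    delimiter and encoding to match the files the task actually writes.
    """
    for path in sorted(Path(folder).rglob("*.csv")):
        with open(path, "r", encoding="utf-8", errors="replace", newline="") as handle:
            reader = csv.reader(handle, delimiter=delimiter)
            for record_no, row in enumerate(reader, start=1):
                for col_no, value in enumerate(row, start=1):
                    n_chars = len(value)
                    n_bytes = len(value.encode("utf-8"))
                    # Flag the field if either measure exceeds the target limit.
                    if max(n_chars, n_bytes) > max_len:
                        yield path.name, record_no, col_no, n_chars, n_bytes


def report(folder, max_len):
    """Print one line per over-long field found under the given folder."""
    for name, record_no, col_no, n_chars, n_bytes in find_long_values(folder, max_len):
        print(f"{name}: record {record_no}, column {col_no}, "
              f"{n_chars} chars / {n_bytes} bytes (limit {max_len})")
```

For the error in this thread, `report` would be called with the path of the "data_files" or "applied_files" folder under the task directory and a limit of 12. The column numbers it prints are 1-based positions in the CSV record, which then need to be matched against the target table's column order.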

Hope this helps.

Regards,

John.