Unlock a world of possibilities! Login now and discover the exclusive benefits awaiting you.

- Qlik Community

- :

- Forums

- :

- Analytics

- :

- New to Qlik Analytics

- :

- Re: Stumped on Merging to Tables with Start and En...

- Subscribe to RSS Feed

- Mark Topic as New

- Mark Topic as Read

- Float this Topic for Current User

- Bookmark

- Subscribe

- Mute

- Printer Friendly Page

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Stumped on Merging to Tables with Start and End Rows

Hi All

I have two tables. One from the QlikSense Logs which denotes the start and end of a session and one from our front end web application that tracks the users movement through our analytics app.

What Im trying to do is map the flow through our site - but first I need to 'combine' these two table to insert the 'Open' and 'Close' records in the correct places in the Pages table.

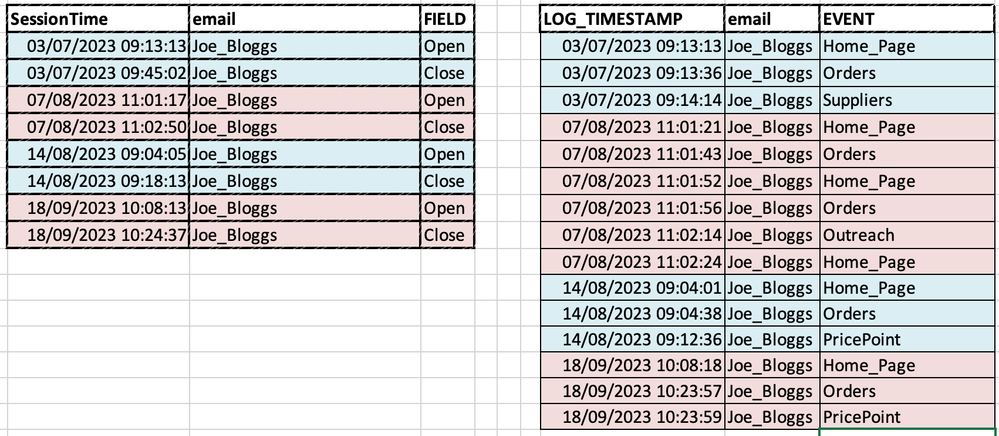

I hope this image describes what Im after :

On the left is the table that denotes the Start and End of the sessions (Open & Close) by user and Timestamp

On the right is the table that shows the flow of the User through our app timestamp. The initial starting place for all sessions is Home_Page but unfortunately users can go back there at any point during the session (eg, 07/08/2023).

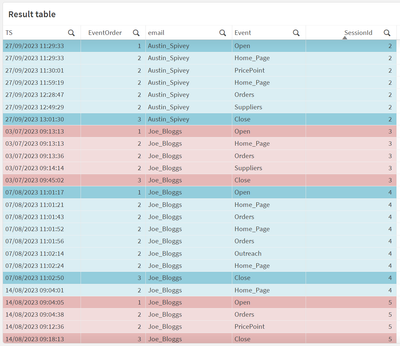

What Id ideally like to get to is this :

I have no idea of how to tackle this so any suggestions really very appreciated!

Many thanks

Dai

Accepted Solutions

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should be able to achieve this by using some math, proper table sorting, and a join.

Here's the script I used:

// STEP 1

[FieldMap]:

Mapping Load * Inline [

old , new

Open , 1

Close , 3

];

// STEP 2

[data]:

Load * Inline [

SessionTime , email , FIELD

03/07/2023 09:13:13 , Joe_Bloggs , Open

03/07/2023 09:13:28 , Austin_Spivey , Open

03/07/2023 09:32:26 , Austin_Spivey , Close

03/07/2023 09:45:02 , Joe_Bloggs , Close

07/08/2023 11:01:17 , Joe_Bloggs , Open

07/08/2023 11:02:50 , Joe_Bloggs , Close

14/08/2023 09:04:05 , Joe_Bloggs , Open

14/08/2023 09:17:21 , Austin_Spivey , Open

14/08/2023 09:18:13 , Joe_Bloggs , Close

14/08/2023 10:11:59 , Austin_Spivey , Close

18/09/2023 10:08:13 , Joe_Bloggs , Open

18/09/2023 10:24:37 , Joe_Bloggs , Close

27/09/2023 11:29:33 , Austin_Spivey , Open

27/09/2023 13:01:30 , Austin_Spivey , Close

];

[data1]:

Load

[SessionTime] as [TS]

, [email]

, [FIELD] as [Event]

, Div(RowNo() - 1, 2) as [SessionId] // STEP 3

, ApplyMap('FieldMap', [FIELD]) as [EventOrder] // STEP 4

Resident [data]

Order By [email], [SessionTime] asc // STEP 5

;

// STEP 6

Drop Table [data];

Join ([data1]) // STEP 9

Load

[LOG TIMESTAMP] as [TS]

, [email]

, [EVENT] as [Event]

, 2 as [EventOrder] // STEP 8

;

// STEP 7

Load * Inline [

LOG TIMESTAMP , email , EVENT

03/07/2023 09:13:13 , Joe_Bloggs , Home_Page

03/07/2023 09:13:28 , Austin_Spivey , Home_Page

03/07/2023 09:13:49 , Austin_Spivey , PricePoint

03/07/2023 09:15:10 , Austin_Spivey , Home_Page

03/07/2023 09:20:13 , Austin_Spivey , Suppliers

03/07/2023 09:29:20 , Austin_Spivey , Home_Page

03/07/2023 09:13:36 , Joe_Bloggs , Orders

03/07/2023 09:14:14 , Joe_Bloggs , Suppliers

07/08/2023 11:01:21 , Joe_Bloggs , Home_Page

07/08/2023 11:01:43 , Joe_Bloggs , Orders

07/08/2023 11:01:52 , Joe_Bloggs , Home_Page

07/08/2023 11:01:56 , Joe_Bloggs , Orders

07/08/2023 11:02:14 , Joe_Bloggs , Outreach

07/08/2023 11:02:24 , Joe_Bloggs , Home_Page

14/08/2023 09:04:01 , Joe_Bloggs , Home_Page

14/08/2023 09:04:38 , Joe_Bloggs , Orders

14/08/2023 09:12:36 , Joe_Bloggs , PricePoint

14/08/2023 09:17:21 , Austin_Spivey , Home_Page

14/08/2023 09:17:30 , Austin_Spivey , Orders

14/08/2023 09:28:00 , Austin_Spivey , Suppliers

14/08/2023 09:31:04 , Austin_Spivey , Orders

14/08/2023 09:50:50 , Austin_Spivey , Home_Page

14/08/2023 10:01:20 , Austin_Spivey , PricePoint

18/09/2023 10:08:18 , Joe_Bloggs , Home_Page

18/09/2023 10:23:57 , Joe_Bloggs , Orders

18/09/2023 10:23:59 , Joe_Bloggs , PricePoint

27/09/2023 11:29:33 , Austin_Spivey , Home_Page

27/09/2023 11:30:01 , Austin_Spivey , PricePoint

27/09/2023 11:59:19 , Austin_Spivey , Home_Page

27/09/2023 12:28:47 , Austin_Spivey , Orders

27/09/2023 12:49:29 , Austin_Spivey , Suppliers

];

[data2]:

NoConcatenate Load // STEP 12

[TS]

, [email]

, [Event]

, Coalesce([SessionId], Peek([SessionId])) as [SessionId] // STEP 10

, [EventOrder]

Resident [data1]

Order By [email], [TS] asc, [EventOrder] asc // STEP 11

;

// STEP 13

Drop Table [data1];

Here are the steps I followed, which line up to the // STEP comments I added in the script:

- Create a mapping table using the Mapping keyword that will map the values "Open" to 1 and "Close" to 3. We do this because we ultimately want for the "Open" events to always be the first event in a user's session and we always want "Close" events to be the last event in a user's session.

- In step 2 I load a table with sample data, showing Open and Close events for 2 users.

- Here, I am creating a new table that is loaded from the table above. I use the RowNo() function to get the row number of the current row and then the Div() function to perform some basic math that results in every two rows having the same number. I name this new field [SessionId] because this will ultimately help us identify the individual sessions (i.e. user1-session1 = open...events...close; user2-session1 = open...events...close; etc.).

- I then create a new field called [EventOrder] that uses our mapping table from step 1 to change Open to 1 and Close to 3.

- The last step for this table is to have it ordered by user and session time so that our [SessionId] field is created correctly, as it uses that RowNo() function which is dependent on ordering. We use the Order By keyword to do this.

- Here we simply drop our original table since we won't need it any longer.

- For step 7, we load our second example table that includes session events for those two users.

- Using our new sample table in a preceding load, we make a table that renames our fields. We create the [EventOrder] field which is just the number 2, since we want these events to always between the Open and Close events, which you'll recall are mapped to 1 and 3, respectively.

- We add the Join keyword here to perform an Outer Join to our previous "Open/Close" table.

- In a new table that's created from our newly-joined "main" table, we use the Coalesce() function to check for rows with a NULL for the [SessionId] field. If they are NULL, then we use the Peek() function to "peek" at the row above to get the [SessionId] from there. We do all of this so that we can match the Open/Close sessions to the events that happen during those sessions.

- In order for the above step to work, we have to order our table user, timestamp, and event order.

- We also need to use the NoConcatenate keyword here so that we don't accidentally concatenate this new table with the previous one. This can happen when you try to load a table that has the same field names and same number of fields as another loaded table.

- Finally, drop the previous table as we no longer need it.

This should now give you the result you need:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

hi

may be something like this

temp:

load

timestamp(SessionTime,'DD/MM/YYYY hh:mm:ss.fff') as LOG_TIMESTAMP

email,

FIELD as EVENT

from ... yoursource1

concatenate

load

timestamp(LOG_TIMESTAMP,'DD/MM/YYYY hh:mm:ss.fff') as LOG_TIMESTAMP

email,

EVENT

from yoursource2;

Final:

No concatenate

Load *

resident temp order by email,LOG_TIMESTAMP (asc) ;

drop table temp;

Here i see OPEN can be at the same time than first EVENT

if your field LOG_TIMESTAMP gets miliseconde it should order correctly , if not ( mean miliseconde are rounded , the trick should remove some dummy milliseconde to your first LOG_TIMESTAMP when the value of Field is 'OPEN'

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

You should be able to achieve this by using some math, proper table sorting, and a join.

Here's the script I used:

// STEP 1

[FieldMap]:

Mapping Load * Inline [

old , new

Open , 1

Close , 3

];

// STEP 2

[data]:

Load * Inline [

SessionTime , email , FIELD

03/07/2023 09:13:13 , Joe_Bloggs , Open

03/07/2023 09:13:28 , Austin_Spivey , Open

03/07/2023 09:32:26 , Austin_Spivey , Close

03/07/2023 09:45:02 , Joe_Bloggs , Close

07/08/2023 11:01:17 , Joe_Bloggs , Open

07/08/2023 11:02:50 , Joe_Bloggs , Close

14/08/2023 09:04:05 , Joe_Bloggs , Open

14/08/2023 09:17:21 , Austin_Spivey , Open

14/08/2023 09:18:13 , Joe_Bloggs , Close

14/08/2023 10:11:59 , Austin_Spivey , Close

18/09/2023 10:08:13 , Joe_Bloggs , Open

18/09/2023 10:24:37 , Joe_Bloggs , Close

27/09/2023 11:29:33 , Austin_Spivey , Open

27/09/2023 13:01:30 , Austin_Spivey , Close

];

[data1]:

Load

[SessionTime] as [TS]

, [email]

, [FIELD] as [Event]

, Div(RowNo() - 1, 2) as [SessionId] // STEP 3

, ApplyMap('FieldMap', [FIELD]) as [EventOrder] // STEP 4

Resident [data]

Order By [email], [SessionTime] asc // STEP 5

;

// STEP 6

Drop Table [data];

Join ([data1]) // STEP 9

Load

[LOG TIMESTAMP] as [TS]

, [email]

, [EVENT] as [Event]

, 2 as [EventOrder] // STEP 8

;

// STEP 7

Load * Inline [

LOG TIMESTAMP , email , EVENT

03/07/2023 09:13:13 , Joe_Bloggs , Home_Page

03/07/2023 09:13:28 , Austin_Spivey , Home_Page

03/07/2023 09:13:49 , Austin_Spivey , PricePoint

03/07/2023 09:15:10 , Austin_Spivey , Home_Page

03/07/2023 09:20:13 , Austin_Spivey , Suppliers

03/07/2023 09:29:20 , Austin_Spivey , Home_Page

03/07/2023 09:13:36 , Joe_Bloggs , Orders

03/07/2023 09:14:14 , Joe_Bloggs , Suppliers

07/08/2023 11:01:21 , Joe_Bloggs , Home_Page

07/08/2023 11:01:43 , Joe_Bloggs , Orders

07/08/2023 11:01:52 , Joe_Bloggs , Home_Page

07/08/2023 11:01:56 , Joe_Bloggs , Orders

07/08/2023 11:02:14 , Joe_Bloggs , Outreach

07/08/2023 11:02:24 , Joe_Bloggs , Home_Page

14/08/2023 09:04:01 , Joe_Bloggs , Home_Page

14/08/2023 09:04:38 , Joe_Bloggs , Orders

14/08/2023 09:12:36 , Joe_Bloggs , PricePoint

14/08/2023 09:17:21 , Austin_Spivey , Home_Page

14/08/2023 09:17:30 , Austin_Spivey , Orders

14/08/2023 09:28:00 , Austin_Spivey , Suppliers

14/08/2023 09:31:04 , Austin_Spivey , Orders

14/08/2023 09:50:50 , Austin_Spivey , Home_Page

14/08/2023 10:01:20 , Austin_Spivey , PricePoint

18/09/2023 10:08:18 , Joe_Bloggs , Home_Page

18/09/2023 10:23:57 , Joe_Bloggs , Orders

18/09/2023 10:23:59 , Joe_Bloggs , PricePoint

27/09/2023 11:29:33 , Austin_Spivey , Home_Page

27/09/2023 11:30:01 , Austin_Spivey , PricePoint

27/09/2023 11:59:19 , Austin_Spivey , Home_Page

27/09/2023 12:28:47 , Austin_Spivey , Orders

27/09/2023 12:49:29 , Austin_Spivey , Suppliers

];

[data2]:

NoConcatenate Load // STEP 12

[TS]

, [email]

, [Event]

, Coalesce([SessionId], Peek([SessionId])) as [SessionId] // STEP 10

, [EventOrder]

Resident [data1]

Order By [email], [TS] asc, [EventOrder] asc // STEP 11

;

// STEP 13

Drop Table [data1];

Here are the steps I followed, which line up to the // STEP comments I added in the script:

- Create a mapping table using the Mapping keyword that will map the values "Open" to 1 and "Close" to 3. We do this because we ultimately want for the "Open" events to always be the first event in a user's session and we always want "Close" events to be the last event in a user's session.

- In step 2 I load a table with sample data, showing Open and Close events for 2 users.

- Here, I am creating a new table that is loaded from the table above. I use the RowNo() function to get the row number of the current row and then the Div() function to perform some basic math that results in every two rows having the same number. I name this new field [SessionId] because this will ultimately help us identify the individual sessions (i.e. user1-session1 = open...events...close; user2-session1 = open...events...close; etc.).

- I then create a new field called [EventOrder] that uses our mapping table from step 1 to change Open to 1 and Close to 3.

- The last step for this table is to have it ordered by user and session time so that our [SessionId] field is created correctly, as it uses that RowNo() function which is dependent on ordering. We use the Order By keyword to do this.

- Here we simply drop our original table since we won't need it any longer.

- For step 7, we load our second example table that includes session events for those two users.

- Using our new sample table in a preceding load, we make a table that renames our fields. We create the [EventOrder] field which is just the number 2, since we want these events to always between the Open and Close events, which you'll recall are mapped to 1 and 3, respectively.

- We add the Join keyword here to perform an Outer Join to our previous "Open/Close" table.

- In a new table that's created from our newly-joined "main" table, we use the Coalesce() function to check for rows with a NULL for the [SessionId] field. If they are NULL, then we use the Peek() function to "peek" at the row above to get the [SessionId] from there. We do all of this so that we can match the Open/Close sessions to the events that happen during those sessions.

- In order for the above step to work, we have to order our table user, timestamp, and event order.

- We also need to use the NoConcatenate keyword here so that we don't accidentally concatenate this new table with the previous one. This can happen when you try to load a table that has the same field names and same number of fields as another loaded table.

- Finally, drop the previous table as we no longer need it.

This should now give you the result you need:

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Is it expected that the 14/08 at 09:04:01 you have an homepage event with an opening at 09:04:05?

If this is an error, a basic concatenate between the 2 tables should do the job. And eventually, once sorted by timestamp and using Previous() and Peek(), you can create an identifier for each session.

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

can just add an if statement while loading itself

tab1:

load "LOG TIMESTAMP" as LOGTIMESTAMP,email,EVENT,1 as Order;

Load * Inline [

LOG TIMESTAMP , email , EVENT

03/07/2023 09:13:13 , Joe_Bloggs , Home_Page

03/07/2023 09:13:28 , Austin_Spivey , Home_Page

03/07/2023 09:13:49 , Austin_Spivey , PricePoint

03/07/2023 09:15:10 , Austin_Spivey , Home_Page

03/07/2023 09:20:13 , Austin_Spivey , Suppliers

03/07/2023 09:29:20 , Austin_Spivey , Home_Page

03/07/2023 09:13:36 , Joe_Bloggs , Orders

03/07/2023 09:14:14 , Joe_Bloggs , Suppliers

07/08/2023 11:01:21 , Joe_Bloggs , Home_Page

07/08/2023 11:01:43 , Joe_Bloggs , Orders

07/08/2023 11:01:52 , Joe_Bloggs , Home_Page

07/08/2023 11:01:56 , Joe_Bloggs , Orders

07/08/2023 11:02:14 , Joe_Bloggs , Outreach

07/08/2023 11:02:24 , Joe_Bloggs , Home_Page

14/08/2023 09:04:01 , Joe_Bloggs , Home_Page

14/08/2023 09:04:38 , Joe_Bloggs , Orders

14/08/2023 09:12:36 , Joe_Bloggs , PricePoint

14/08/2023 09:17:21 , Austin_Spivey , Home_Page

14/08/2023 09:17:30 , Austin_Spivey , Orders

14/08/2023 09:28:00 , Austin_Spivey , Suppliers

14/08/2023 09:31:04 , Austin_Spivey , Orders

14/08/2023 09:50:50 , Austin_Spivey , Home_Page

14/08/2023 10:01:20 , Austin_Spivey , PricePoint

18/09/2023 10:08:18 , Joe_Bloggs , Home_Page

18/09/2023 10:23:57 , Joe_Bloggs , Orders

18/09/2023 10:23:59 , Joe_Bloggs , PricePoint

27/09/2023 11:29:33 , Austin_Spivey , Home_Page

27/09/2023 11:30:01 , Austin_Spivey , PricePoint

27/09/2023 11:59:19 , Austin_Spivey , Home_Page

27/09/2023 12:28:47 , Austin_Spivey , Orders

27/09/2023 12:49:29 , Austin_Spivey , Suppliers

];

join(tab1)

tab:

load SessionTime as LOGTIMESTAMP,email,FIELD as EVENT,if(FIELD='Open',0,2) as Order;

Load * Inline [

SessionTime , email , FIELD

03/07/2023 09:13:13 , Joe_Bloggs , Open

03/07/2023 09:13:28 , Austin_Spivey , Open

03/07/2023 09:32:26 , Austin_Spivey , Close

03/07/2023 09:45:02 , Joe_Bloggs , Close

07/08/2023 11:01:17 , Joe_Bloggs , Open

07/08/2023 11:02:50 , Joe_Bloggs , Close

14/08/2023 09:04:05 , Joe_Bloggs , Open

14/08/2023 09:17:21 , Austin_Spivey , Open

14/08/2023 09:18:13 , Joe_Bloggs , Close

14/08/2023 10:11:59 , Austin_Spivey , Close

18/09/2023 10:08:13 , Joe_Bloggs , Open

18/09/2023 10:24:37 , Joe_Bloggs , Close

27/09/2023 11:29:33 , Austin_Spivey , Open

27/09/2023 13:01:30 , Austin_Spivey , Close

];

NoConcatenate

tab2:

load LOGTIMESTAMP,email,EVENT

resident tab1

order by email,LOGTIMESTAMP,Order;

drop table tab1;

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Amazing and detailed response - thanks so much

- Mark as New

- Bookmark

- Subscribe

- Mute

- Subscribe to RSS Feed

- Permalink

- Report Inappropriate Content

Hi @AustinSpivey - thanks again for your previous solution. If I may, Id like to ask another question. Is it possible to order the events from 1 : open and then increment +1 for each row until we hit the 'close'. So we would end up with, for example

| User | Event | Flow | TimeStamp | SessionID |

| Joe Bloggs | Open | 1 | 20/02/2024 09:20:44 | 1 |

| Joe Bloggs | HomePage | 2 | 20/02/2024 09:21:20 | 1 |

| Joe Bloggs | Orders | 3 | 20/02/2024 09:24:12 | 1 |

| Joe Bloggs | Price Point | 4 | 20/02/2024 09:28:01 | 1 |

| Joe Bloggs | Close | 5 | 20/02/2024 09:31:30 | 1 |

| Jane Doe | Open | 1 | 20/02/2024 10:15:03 | 2 |

| Jane Doe | HomePage | 2 | 20/02/2024 10:17:40 | 2 |

| Jane Doe | Close | 3 | 20/02/2024 10:19:50 | 2 |

Many thanks once again.

Dai