Qlik Talend Data Integration: How to use the Bulk Copy API for batch insert operations for tJDBCOutput in Spark Job for SQLServer

Apr 17, 2024 6:24:25 AM

To take advantage of the performance boost that Batch Size provides on SQL Server, make sure you are using Microsoft JDBC Driver for SQL Server version 9.2 or later.

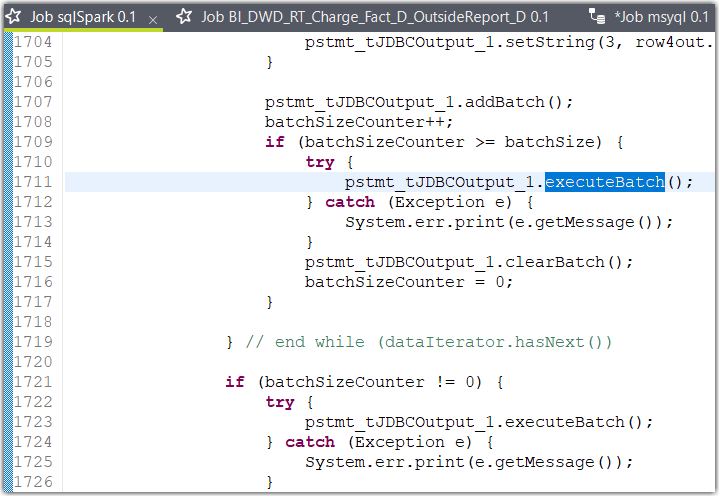
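To confirm at run time which driver version a connection is actually using, a check along the following lines could help. This is a minimal sketch: the class `DriverVersionCheck` and its methods are hypothetical, and it assumes the connection uses the Microsoft driver and that the string returned by `DatabaseMetaData.getDriverVersion()` starts with `major.minor` digits.

```java
import java.sql.Connection;
import java.sql.DatabaseMetaData;
import java.sql.SQLException;

// Hypothetical helper: reports whether a Microsoft JDBC Driver for SQL Server
// version is 9.2 or later, the first release that supports the Bulk Copy API
// for batch insert operations.
final class DriverVersionCheck {

    private DriverVersionCheck() {}

    // Parses the leading "major.minor" of a version string such as "9.2.1.0"
    // and returns true when it is at least 9.2.
    static boolean supportsBulkCopyForBatchInsert(String driverVersion) {
        String[] parts = driverVersion.trim().split("\\.");
        int major = leadingInt(parts[0]);
        int minor = parts.length > 1 ? leadingInt(parts[1]) : 0;
        return major > 9 || (major == 9 && minor >= 2);
    }

    // Reads the digits at the start of s (e.g. 2 from "2-preview"); returns 0 if there are none.
    private static int leadingInt(String s) {
        int end = 0;
        while (end < s.length() && Character.isDigit(s.charAt(end))) {
            end++;
        }
        return end == 0 ? 0 : Integer.parseInt(s.substring(0, end));
    }

    // Asks an open connection which driver version it is using.
    // Assumes the connection was opened with the Microsoft JDBC Driver for SQL Server.
    static boolean supportsBulkCopyForBatchInsert(Connection conn) throws SQLException {
        DatabaseMetaData meta = conn.getMetaData();
        return supportsBulkCopyForBatchInsert(meta.getDriverVersion());
    }
}
```

Only the leading major and minor numbers are compared, so patch and build suffixes do not affect the result.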

Microsoft JDBC Driver for SQL Server version 9.2 and above supports using the Bulk Copy API for batch insert operations. When this feature is enabled, the driver performs Bulk Copy operations underneath when executing batch insert operations. The goal is better performance while inserting the same data that a regular batch insert operation would insert. The driver parses the user's SQL query and uses the Bulk Copy API instead of the usual batch insert operation. The Microsoft article listed under Related Content describes the various ways to enable the Bulk Copy API for batch insert feature, lists its limitations, and includes a small code sample that demonstrates its usage and the performance increase.
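According to the Microsoft documentation, the feature is turned on through the `useBulkCopyForBatchInsert` connection property, which can be set in the JDBC connection URL. The sketch below appends that property to a SQL Server JDBC URL only when it is not already present. The helper `BulkCopyUrl.withBulkCopyForBatchInsert` is hypothetical, and exactly where the URL is entered in your Job (for example, the JDBC URL field of the connection component) is an assumption to verify in your Studio version.

```java
// Hypothetical helper: adds useBulkCopyForBatchInsert=true to a SQL Server JDBC URL.
final class BulkCopyUrl {

    static final String PROPERTY = "useBulkCopyForBatchInsert";

    private BulkCopyUrl() {}

    static String withBulkCopyForBatchInsert(String jdbcUrl) {
        // SQL Server JDBC URLs carry connection properties as ';'-separated key=value pairs.
        for (String part : jdbcUrl.split(";")) {
            int eq = part.indexOf('=');
            if (eq > 0 && part.substring(0, eq).trim().equalsIgnoreCase(PROPERTY)) {
                return jdbcUrl; // already set explicitly: keep the caller's choice
            }
        }
        String separator = jdbcUrl.endsWith(";") ? "" : ";";
        return jdbcUrl + separator + PROPERTY + "=true";
    }
}
```

For example, `jdbc:sqlserver://db.example.com:1433;databaseName=sales` (placeholder host and database) becomes `jdbc:sqlserver://db.example.com:1433;databaseName=sales;useBulkCopyForBatchInsert=true`. An explicit `useBulkCopyForBatchInsert=false` is left untouched, so the helper never overrides a deliberate setting.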

This feature applies only to the `executeBatch()` and `executeLargeBatch()` APIs of `PreparedStatement` and `CallableStatement`. The "Use Batch" option of tJDBCOutput relies on `executeBatch()`, so it can take advantage of this feature.
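For reference, the plain JDBC pattern behind a batch insert looks roughly like this: rows are queued with `addBatch()` and sent with `executeBatch()` every `batchSize` rows, and `executeBatch()` is the call the Bulk Copy feature applies to. This is an illustrative sketch, not tJDBCOutput's actual implementation; the table `dbo.customers`, its `name` column, and the class `BatchInsertSketch` are hypothetical.

```java
import java.sql.Connection;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.List;

// Hypothetical example: batch insert into a table dbo.customers(name NVARCHAR(100)).
// With useBulkCopyForBatchInsert=true on the connection (driver 9.2+), the driver may
// carry out each executeBatch() call as a Bulk Copy operation instead of a regular batch insert.
final class BatchInsertSketch {

    private BatchInsertSketch() {}

    // Sends the names in groups of batchSize and returns the number of rows submitted.
    static int insertAll(Connection conn, List<String> names, int batchSize) throws SQLException {
        if (batchSize <= 0) {
            throw new IllegalArgumentException("batchSize must be positive");
        }
        String sql = "INSERT INTO dbo.customers (name) VALUES (?)";
        int submitted = 0;
        try (PreparedStatement ps = conn.prepareStatement(sql)) {
            int pending = 0;
            for (String name : names) {
                ps.setString(1, name);
                ps.addBatch();          // queue the row on the client side
                pending++;
                if (pending == batchSize) {
                    ps.executeBatch();  // send the queued rows as one batch
                    submitted += pending;
                    pending = 0;
                }
            }
            if (pending > 0) {
                ps.executeBatch();      // flush the final, partial batch
                submitted += pending;
            }
        }
        return submitted;
    }
}
```

A caller would pass a `Connection` obtained from `DriverManager.getConnection(...)` with a URL that includes `useBulkCopyForBatchInsert=true`. The method counts submitted rows instead of summing the `int[]` returned by `executeBatch()`, because a driver may report `Statement.SUCCESS_NO_INFO` rather than per-row update counts.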

Related Content

Using bulk copy API for batch insert operation